This bug was fixed in version 4.0.

Inspired by a recent question in the discussion group, I bring you an example of how to route touch events to the window which should handle them!

The bug - see it in action

First, let's look at some code which demonstrates the issue:

    using Microsoft.SPOT;
    using Microsoft.SPOT.Input;
    using Microsoft.SPOT.Presentation;
    using Microsoft.SPOT.Presentation.Media;
    using Microsoft.SPOT.Touch;

    namespace TouchWindows
    {
        public class MyApplication : Application
        {
            // Typed reference to the running application, to avoid casting Application.Current
            public static MyApplication MyCurrent;

            public static void Main()
            {
                MyCurrent = new MyApplication();
                Touch.Initialize(MyCurrent); // register the application as the touch event listener
                MyCurrent.Run();
            }

            public MyApplication()
            {
                // Create 5 full-screen windows which differ only in background color
                for (int i = 0; i < 5; i++)
                    new MyWindow
                    {
                        Index = i,
                        Height = SystemMetrics.ScreenHeight,
                        Width = SystemMetrics.ScreenWidth,
                        Background = new SolidColorBrush(ColorUtility.ColorFromRGB(
                            (byte)(255 * (i & 1)),
                            (byte)(127 * (i & 2)),
                            (byte)(63 * (i & 4))
                        )),
                        Visibility = Visibility.Visible,
                    };
            }
        }

        public class MyWindow : Window
        {
            protected override void OnStylusDown(StylusEventArgs e)
            {
                // Topmost = false;
                // Bring the next window (by creation order) on top
                MyApplication.MyCurrent.Windows[(Index + 1) % MyApplication.MyCurrent.Windows.Count].Topmost = true;
            }

            public int Index;
        }
    }

This is pretty standard startup code, similar to the project which is generated when you create a new window application. When the application is instantiated, it creates 5 empty full-screen windows, which differ in background color. The windows are automatically assigned to the application and the first one is set as the application's MainWindow (all this is ensured by the standard Window constructor). I also keep a static reference to the application instance (MyApplication.MyCurrent), just to have nicer code without the need for explicit casting all the time.

The main purpose of MyWindow is to bring the next window on top of the others. If the Window.Topmost property were implemented properly (currently it always assumes you assign true to it), the class could have been pretty straightforward. However, with the current implementation we have to introduce the Index field and set Topmost = true on the next window (Index + 1).

Windows are created in this order (value of Index): black (0), red (1), green (2), yellow (3), blue (4).

If you run the application, you will see a blue window. This is because the blue window was the last one added. Now if you click it (of course guys, touch it! I knew you were running this on hardware ;-)), you will see a red window. Huh? The reason is that the application's main window is (by default) the first window created (black), and only this one receives the touch events, even if it is not visible at all! The OnStylusDown handler of the black window brings the next one (red) on top. If you touch it again, nothing will change, because the black one is still the one receiving the event.

So this is how the bug behaves.
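To make the sequence easier to follow without hardware, here is a minimal desktop C# sketch that models only the z-order bookkeeping. SimWindow, SimScreen and BugDemo are hypothetical stand-ins invented for this illustration, not framework types, and the routeToTop flag is only there to contrast the observed behavior with what routing to the top window would give.

```csharp
using System;
using System.Collections.Generic;

// Simplified stand-ins for the framework types; every name here is hypothetical.
class SimWindow
{
    public int Index;
    public string Color;
}

class SimScreen
{
    // Creation order, like Application.Windows.
    public readonly List<SimWindow> Windows = new List<SimWindow>();
    // Z-order: the last entry is drawn on top.
    public readonly List<SimWindow> ZOrder = new List<SimWindow>();

    public SimWindow MainWindow { get { return Windows[0]; } }
    public SimWindow Top { get { return ZOrder[ZOrder.Count - 1]; } }

    public void Add(string color)
    {
        SimWindow w = new SimWindow { Index = Windows.Count, Color = color };
        Windows.Add(w);
        ZOrder.Add(w);
    }

    // Models Topmost = true: move the window to the end of the z-order.
    public void BringToTop(SimWindow w)
    {
        ZOrder.Remove(w);
        ZOrder.Add(w);
    }

    // Models MyWindow.OnStylusDown running on whichever window got the touch.
    public void Touch(SimWindow receiver)
    {
        BringToTop(Windows[(receiver.Index + 1) % Windows.Count]);
    }
}

static class BugDemo
{
    // Returns the color seen on top initially and after each of three touches.
    public static string Run(bool routeToTop)
    {
        SimScreen screen = new SimScreen();
        foreach (string color in new[] { "black", "red", "green", "yellow", "blue" })
            screen.Add(color);

        List<string> seen = new List<string> { screen.Top.Color };
        for (int i = 0; i < 3; i++)
        {
            // The bug: the main window receives the touch, not the top one.
            screen.Touch(routeToTop ? screen.Top : screen.MainWindow);
            seen.Add(screen.Top.Color);
        }
        return string.Join(" -> ", seen.ToArray());
    }
}
```

In this model, BugDemo.Run(false) yields "blue -> red -> red -> red", which matches what you see on the device, while BugDemo.Run(true) yields "blue -> black -> red -> green".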

The touch - how does it work

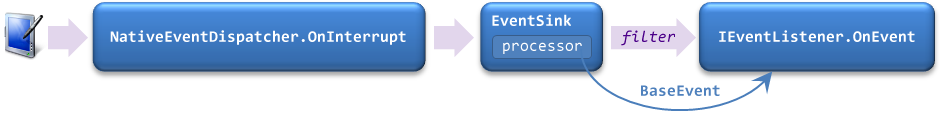

There is one very important line in the application's entry point: Touch.Initialize(MyCurrent). This method registers a class of IEventListener type as a touch event listener. The list of such listeners is maintained by the (kind of static) Microsoft.SPOT.EventSink class, which inherits from Microsoft.SPOT.Hardware.NativeEventDispatcher. When you touch the display, the touch driver uses the NativeEventDispatcher's OnInterrupt event to bring the event into the managed world.

For each event category (gesture, network, storage, touch, etc.), the EventSink actually maintains three lists: processors, filters and listeners. Only one processor, one filter and one listener are supported per category. If you or your hardware vendor introduce a custom event category, you can use any free number as the EventCategory. In that case, you usually have another static class (like NetworkChange or RemovableMedia) which exposes managed events to which you can attach multiple handlers. Unfortunately, this is not the case for touch events, but we will get to that in a moment.

The processor's task is to extract the 64 bits of native data (of which 8 bits are reserved for the event category) and return an instance of Microsoft.SPOT.BaseEvent. If there is no processor registered for the given event category, a GenericEvent is created, assuming the data represents X and Y screen coordinates. The event data is then passed to the filter, which returns true to continue processing the event or false to ignore it. Such a filter is used, for example, by the InkCanvas control to collect ink only within the bounds of its surface.

Filters and listeners share the same interface (there is only IEventListener), which is why the examples on this page need to return a boolean value. For listeners the result is not used anywhere, but this may change in the future. A listener is just a recipient of the event, much like a standard event handler.

The Application class implements the IEventListener interface, so all WPF applications (including MyApplication in the example above) can receive events. This is used by the touch system, and calling Touch.Initialize(MyCurrent) just registers the application as a touch event listener. When you touch the display, the application receives the event, creates a Microsoft.SPOT.Input.InputReport and sends it to the WPF input system. In the case of touch events, it also sets Microsoft.SPOT.Input.InputManager.StylusWindow to the control which will receive the WPF stylus events. Here is where the bug is, as it always sets this to its MainWindow instead of the top window:
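The processor, filter, listener order described above can be sketched in plain desktop C#. This is only a rough model under stated assumptions: SimEvent, ISimEventListener, LambdaListener and SimEventSink are hypothetical names made up for the illustration, and the raw 64 bits are replaced by already decoded category, x and y values, because the exact bit layout is not covered here.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified model; none of these types are the framework's own.
class SimEvent
{
    public int Category;
    public int X;
    public int Y;
}

// Filters and listeners share one interface, as described in the text.
interface ISimEventListener
{
    bool OnEvent(SimEvent ev);
}

// Small adapter so that a lambda can act as a filter or a listener.
class LambdaListener : ISimEventListener
{
    private readonly Func<SimEvent, bool> _handler;
    public LambdaListener(Func<SimEvent, bool> handler) { _handler = handler; }
    public bool OnEvent(SimEvent ev) { return _handler(ev); }
}

class SimEventSink
{
    // At most one processor, one filter and one listener per category.
    private readonly Dictionary<int, Func<int, int, SimEvent>> _processors =
        new Dictionary<int, Func<int, int, SimEvent>>();
    private readonly Dictionary<int, ISimEventListener> _filters =
        new Dictionary<int, ISimEventListener>();
    private readonly Dictionary<int, ISimEventListener> _listeners =
        new Dictionary<int, ISimEventListener>();

    public void SetProcessor(int category, Func<int, int, SimEvent> processor) { _processors[category] = processor; }
    public void SetFilter(int category, ISimEventListener filter) { _filters[category] = filter; }
    public void SetListener(int category, ISimEventListener listener) { _listeners[category] = listener; }

    // Returns true if the event passed the filter stage.
    public bool Dispatch(int category, int x, int y)
    {
        SimEvent ev;
        Func<int, int, SimEvent> processor;
        if (_processors.TryGetValue(category, out processor))
        {
            ev = processor(x, y);
        }
        else
        {
            // No processor registered: fall back to a generic event carrying X and Y.
            ev = new SimEvent { Category = category, X = x, Y = y };
        }

        ISimEventListener filter;
        if (_filters.TryGetValue(category, out filter) && !filter.OnEvent(ev))
            return false; // the filter asked to ignore this event

        ISimEventListener listener;
        if (_listeners.TryGetValue(category, out listener))
            listener.OnEvent(ev); // the listener's return value is not used

        return true;
    }
}
```

For example, with a filter that only accepts events whose X is below 100 (loosely in the spirit of the InkCanvas bounds check) and a listener that counts events, dispatching a touch at (10, 20) reaches the listener, while one at (150, 20) is dropped at the filter stage.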

    public bool OnEvent(BaseEvent ev)
    {
        StylusEvent stylusEvent = (StylusEvent)ev;

        UIElement capturedElement = Stylus.Captured;
        if (capturedElement == null && MainWindow != null)
            capturedElement = MainWindow.ChildElementFromPoint(stylusEvent.X, stylusEvent.Y);

        InputManager.CurrentInputManager.StylusWindow = capturedElement ?? MainWindow;

        Dispatcher.BeginInvoke(_inputCallback, // reference to InputProviderSite.ReportInput method
            new object[] {
                new RawStylusInputReport(null, stylusEvent.Time, stylusEvent.EventMessage, stylusEvent.X, stylusEvent.Y)
            });

        return true;
    }

(The code is simplified; the real method handles events other than stylus events, too.)

The workaround - reflection required

Although the Application.OnEvent method is not virtual, it seems that derived types are used when interface methods are resolved, so you could just hide the original method using the new keyword and fix the behavior there. This is, however, a dirty solution and will not be presented here. Let's do it professionally, universally and extensibly, using our own IEventListener implementation:

    using System;
    using Microsoft.SPOT;
    using Microsoft.SPOT.Input;
    using Microsoft.SPOT.Presentation;

    public class TopmostListener : IEventListener
    {
        private Application _application; // for performance, cache everything
        private InputManager _inputManager;
        private ReportInputCallback _inputCallback;

        public virtual void InitializeForEventSource()
        {
            _application = Application.Current;
            if (_application == null)
                throw new InvalidOperationException("Application required.");

            _application.InitializeForEventSource(); // don't forget to initialize the application first

            _inputManager = InputManager.CurrentInputManager;
            _inputCallback = new ReportInputCallback(_inputManager.RegisterInputProvider(this).ReportInput);
        }

        public virtual bool OnEvent(BaseEvent ev)
        {
            StylusEvent stylusEvent = ev as StylusEvent;
            if (stylusEvent == null)
                return _application.OnEvent(ev); // we are not interested in fixing other events, so leave them to the original implementation

            UIElement capturedElement = Stylus.Captured;
            if (capturedElement == null && _application.MainWindow != null)
                capturedElement = GetTopmostWindow().ChildElementFromPoint(stylusEvent.X, stylusEvent.Y);

            _inputManager.StylusWindow = capturedElement ?? GetTopmostWindow();

            _application.Dispatcher.BeginInvoke(_inputCallback, // reference to InputProviderSite.ReportInput method
                new object[] {
                    new RawStylusInputReport(null, stylusEvent.Time, stylusEvent.EventMessage, stylusEvent.X, stylusEvent.Y)
                });

            return true;
        }

        public Window GetTopmostWindow()
        {
            ...
        }

        private delegate bool ReportInputCallback(InputReport inputReport);
    }

Using this listener is pretty easy, just change the entry point to:

    public static void Main()
    {
        MyCurrent = new MyApplication();
        Touch.Initialize(new TopmostListener());
        MyCurrent.Run();
    }

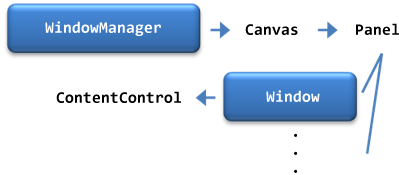

Now the only nasty trick which remains is to find the topmost window. Let's see how window management in the .NET Micro Framework works. All windows are kept in two places: in the application and in the window manager. The application keeps one list for windows belonging to the application and one for others, although I don't see any reason for this in the current framework version. The (kind of static) window manager keeps a list of all the windows created on the device, and it also lays out and renders the windows itself, as it is a simple Canvas control.

So in order to find the topmost window, we have to find the last logical child of the WindowManager control (this one is rendered last, so it appears on top of the others). If you set Topmost to true, the window manager just moves the window to the end of its children collection. If you are familiar with WPF on the .NET Framework, you already know what we need: a logical tree helper to walk through the control tree. We don't have one in the .NET Micro Framework, so we have to create it. The drawback of creating our own tree helper is that it does not have access to the framework's internal members, so we need the help of reflection to get the logical children:

    using System.Reflection;
    using Microsoft.SPOT.Presentation;

    public static class LogicalTreeHelper
    {
        private static FieldInfo _logicalChildren = typeof(UIElement).GetField("_logicalChildren", BindingFlags.NonPublic | BindingFlags.Instance);

        public static UIElementCollection GetChildren(UIElement element)
        {
            return (UIElementCollection)_logicalChildren.GetValue(element);
        }

        public static UIElement GetParent(UIElement element)
        {
            return element.Parent;
        }
    }

Now it's obvious how to get the very top window:

    public Window GetTopmostWindow()
    {
        UIElementCollection windows = LogicalTreeHelper.GetChildren(WindowManager.Instance);
        if (windows == null)
            return _application.MainWindow; // revert to the framework's default behavior

        // Walk from the last (topmost) child down, with a visibility check:
        // routing touch events to invisible windows does not make sense either
        int i = windows.Count;
        while (--i >= 0)
        {
            Window window = (Window)windows[i];
            if (window.Visibility == Visibility.Visible)
                return window;
        }

        return _application.MainWindow; // no visible window found, revert to the default behavior
    }
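To check the backward scan and its fallbacks away from the device, the same logic can be modeled in plain desktop C#. ScanWindow and TopmostScan are hypothetical names used only for this sketch; the list stands in for the window manager's children collection, with the last entry rendered on top.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a window; only what the scan needs.
class ScanWindow
{
    public string Name;
    public bool Visible;
}

static class TopmostScan
{
    // children is in rendering order: the last entry is drawn on top.
    public static ScanWindow Find(IList<ScanWindow> children, ScanWindow mainWindow)
    {
        if (children == null)
            return mainWindow; // no children collection: default behavior

        for (int i = children.Count - 1; i >= 0; i--)
        {
            if (children[i].Visible)
                return children[i]; // first visible window from the top
        }

        return mainWindow; // no visible window found: default behavior
    }
}
```

With three windows where the last one is hidden, the scan returns the middle one; if every window is hidden, or the collection is null, it falls back to the main window.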

And we are finished. If you now run the application, you can cycle through the windows as expected, and only the top one receives the touch event.

PS: Unfortunately, this does not fix the Microsoft.SPOT.Input.Stylus.Capture methods, which remain available only for children of the application's main window. However, if you are creating your own listener, you can create and use your own capture class anyway.